High-End Music Server/Player

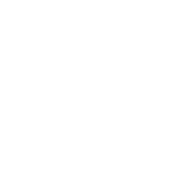

A music signal is two-dimensional – it has an amplitude dimension and a time dimension. We only hear sound when the amplitude oscillates over time. The greater the amplitude, the louder the sound. The faster the amplitude oscillates, the higher the pitch of the sound. A sound system should aim to accurately reproduce both dimensions.

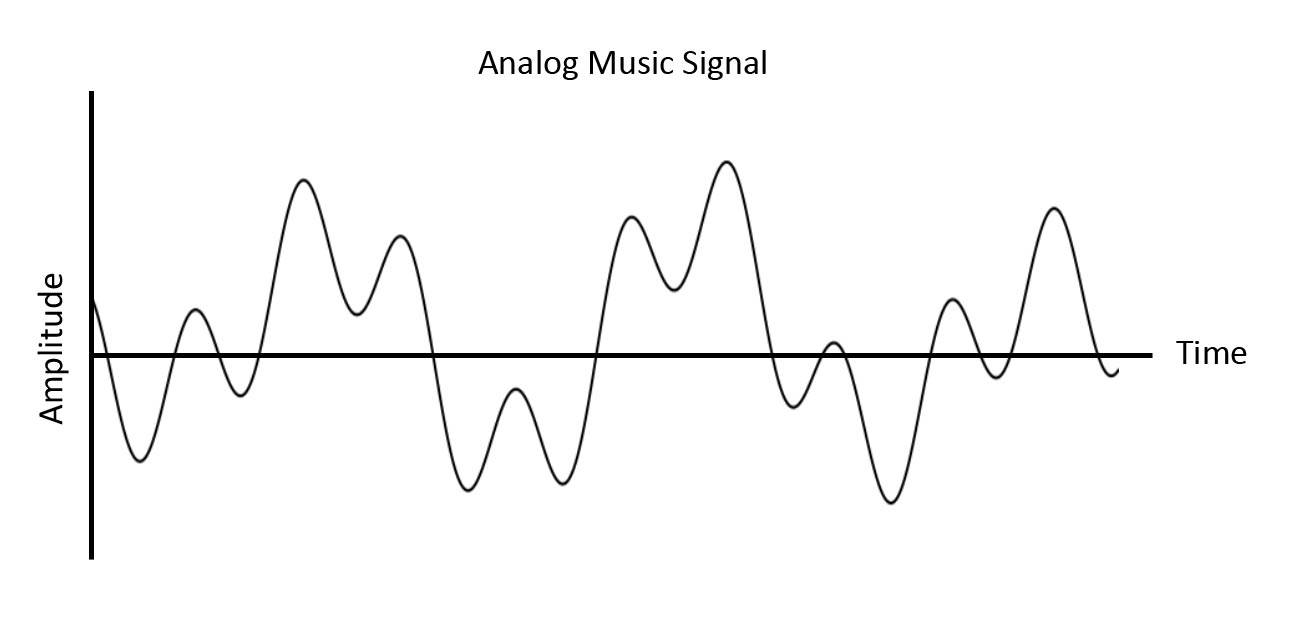

Encoding an analog signal as a digital signal involves measuring its amplitude at regular time intervals, set by the sample rate. While the black digital ‘staircase’ line below looks very different from the red line of the actual signal, the DAC stage converts the data back into a smooth wave.
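
Here is a minimal Python sketch of the sampling step. It assumes a 1 kHz test tone, a 44.1 kHz sample rate and 16-bit samples, all of which are illustrative choices rather than values from the text. Each amplitude measurement is taken at an evenly spaced instant and rounded to the nearest integer code. The helper `sample_and_quantize` is hypothetical.

```python
import math

def sample_and_quantize(freq_hz, sample_rate_hz, bit_depth, n_samples):
    """Sample a full-scale sine at evenly spaced instants and round to integer codes."""
    max_code = 2 ** (bit_depth - 1) - 1            # e.g. 32767 for 16-bit audio
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                     # time of sample n: fixed spacing
        amplitude = math.sin(2 * math.pi * freq_hz * t)
        codes.append(round(amplitude * max_code))  # nearest step of the "staircase"
    return codes

codes = sample_and_quantize(1000, 44100, 16, 8)
print(codes)
```

The returned list holds only amplitude codes. The time of each sample is implied by its position in the list together with the sample rate.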

The point to understand is that the digital music file contains only the amplitude measurements. There is no equivalent record of timing. The file simply states the sample rate in its header, so that the playback process knows the rate at which to play the samples.
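
The sketch below shows that the timing lives only in the header. It uses Python's standard `wave` module to write a tiny WAV file in memory and then read it back. The sample values are hypothetical. The data section holds nothing but amplitude codes, and the time of sample n is reconstructed as n divided by the frame rate stored in the header.

```python
import io
import wave
from array import array

# Hypothetical 16-bit mono amplitude codes (illustrative values only).
samples = [0, 4653, 9211, 13583, 17679]

buf = io.BytesIO()
with wave.open(buf, "wb") as w:
    w.setnchannels(1)        # mono
    w.setsampwidth(2)        # 2 bytes per sample = 16-bit
    w.setframerate(44100)    # the only timing information: one number in the header
    w.writeframes(array("h", samples).tobytes())  # native byte order, as wave expects

buf.seek(0)
with wave.open(buf, "rb") as r:
    rate = r.getframerate()
    raw = r.readframes(r.getnframes())

decoded = array("h")
decoded.frombytes(raw)
# Playback derives each sample's time from its index and the header rate.
times = [n / rate for n in range(len(decoded))]
print(rate, list(decoded), times[:2])
```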

Because the sample rate and bit depth (and therefore the bitrate) are finite, digital audio recording always involves trade-offs that result in small errors. Arguably, this is offset by the resilience of a digital file during storage and transmission. An analog signal, by comparison, inevitably and irrecoverably degrades with every processing or transmission step.
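
One of those small errors is quantization error. Each measurement is rounded to the nearest available level, so the error is at most half a step. The sketch below checks that bound numerically and computes the textbook signal-to-noise figure of about 6.02N + 1.76 dB for a full-scale sine at N bits. The test values and the helper name `quantization_stats` are illustrative assumptions.

```python
import math

def quantization_stats(bit_depth, n_values=10000):
    """Worst-case rounding error and textbook SNR for a given bit depth."""
    step = 2.0 / 2 ** bit_depth          # full scale spans -1.0 .. +1.0
    worst = 0.0
    for i in range(n_values):
        x = math.sin(0.7 * i)            # arbitrary test amplitudes in [-1, 1]
        q = round(x / step) * step       # nearest quantization level
        worst = max(worst, abs(x - q))
    snr_db = 6.02 * bit_depth + 1.76     # ideal SNR for a full-scale sine
    return step, worst, snr_db

for bits in (16, 24):
    step, worst, snr_db = quantization_stats(bits)
    print(f"{bits}-bit: step={step:.3e}, worst error={worst:.3e}, SNR approx {snr_db:.1f} dB")
```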

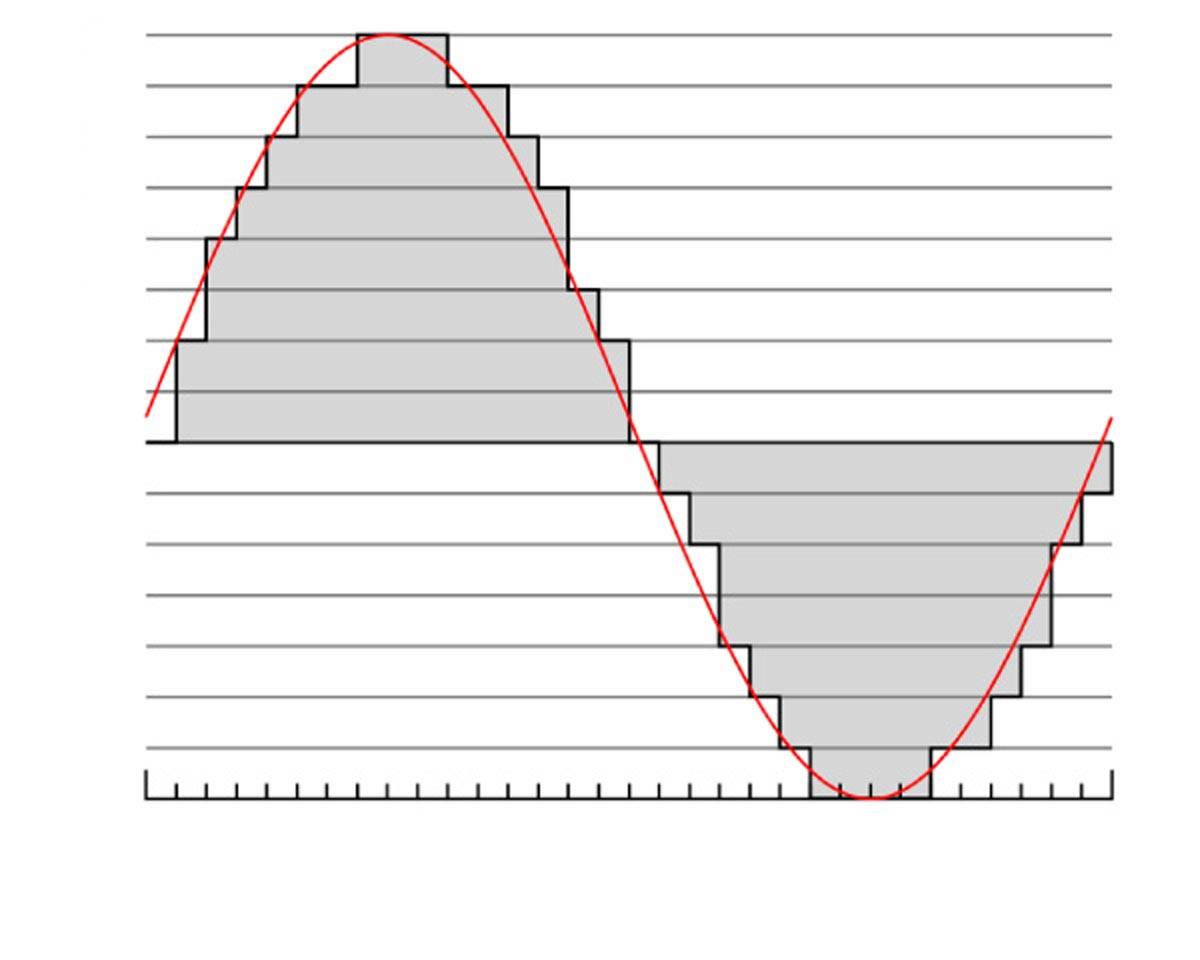

Binary data (using multiple 1s and 0s to record each amplitude measurement) is easily transmitted from one point to another without distorting the information. The binary 1s and 0s can be represented using high (for a 1) and low (for a 0) levels of voltage or light (or even holes in a punch card, different magnetic polarities, etc.).
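
This sketch shows how one amplitude measurement becomes a pattern of high and low levels. It assumes 16-bit two's-complement samples and logic levels of 0 V and 3.3 V. Both are illustrative assumptions, and the helper names are hypothetical.

```python
def sample_to_bits(code, bit_depth=16):
    """Two's-complement bit pattern of a signed sample code, most significant bit first."""
    unsigned = code & ((1 << bit_depth) - 1)   # wrap negatives into two's complement
    return [(unsigned >> i) & 1 for i in range(bit_depth - 1, -1, -1)]

def bits_to_levels(bits, v_low=0.0, v_high=3.3):
    """Represent each bit as a voltage: v_high for a 1, v_low for a 0 (assumed levels)."""
    return [v_high if b else v_low for b in bits]

bits = sample_to_bits(4653)    # 4653 = 0x122D
levels = bits_to_levels(bits)
print(bits)
print(levels)
```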

The key point is that the voltage level or light intensity does not have to be perfectly accurate. Mild to moderate distortion will not cause a high value (1) to be misread as a low value (0), or vice versa. In the image below, any signal above the high threshold is read as a 1 and any signal below the low threshold is read as a 0. This illustrates how it takes extreme signal distortion to create a bit error.
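
The two-threshold decision can be sketched as follows. The threshold voltages (1.0 V and 2.3 V for a 0 V / 3.3 V signal) are assumptions. The behavior between the thresholds is also an assumption. The text does not say what happens there, so this sketch holds the previous decision, as a Schmitt-trigger input would.

```python
def decode_levels(voltages, low_threshold=1.0, high_threshold=2.3, initial=0):
    """Turn received voltages back into bits using two decision thresholds."""
    bits = []
    state = initial
    for v in voltages:
        if v > high_threshold:
            state = 1
        elif v < low_threshold:
            state = 0
        # Between the thresholds the previous decision is held (hysteresis, an assumption).
        bits.append(state)
    return bits

sent = [1, 0, 1, 1, 0, 0, 1, 0]
clean = [3.3 if b else 0.0 for b in sent]

# Mild to moderate distortion: every level is off by up to 0.9 V.
mild = [0.9, -0.7, 0.5, -0.9, 0.8, 0.6, -0.8, 0.3]
received = [v + d for v, d in zip(clean, mild)]

# Extreme distortion: one low level is pushed up by 2.5 V, past the high threshold.
extreme = list(received)
extreme[4] = clean[4] + 2.5

print(decode_levels(received))   # matches `sent`
print(decode_levels(extreme))    # bit 4 is misread as a 1
```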

High-bandwidth data networks can easily move digital data files quickly over long distances without any distortion of the information contained in them, provided the network is designed to keep the signal level above the noise level, and to simply re-send the occasional packet that arrives corrupted at the other end. This works very well for transmitting web pages, emails, spreadsheets, etc. It also works for transferring video and music files. But the situation is different when you are streaming video and music files, because timing becomes a factor.
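
The "simply re-send" strategy can be sketched with a CRC-32 checksum from Python's `zlib` module. The lossy `channel` function is hypothetical and deterministic, so the result is repeatable. Every re-send costs time. That is harmless for a file transfer, but it is a problem for a stream that must deliver each sample on schedule.

```python
import zlib

def channel(seq, payload, attempt):
    """Hypothetical noisy link: corrupts the first attempt of every third packet."""
    if seq % 3 == 0 and attempt == 0:
        return bytes([payload[0] ^ 0xFF]) + payload[1:]   # flip every bit of byte 0
    return payload

def transfer(data, chunk_size=4):
    """Send data in packets and re-send any packet whose CRC-32 check fails."""
    received, resends = [], 0
    for seq, start in enumerate(range(0, len(data), chunk_size)):
        payload = data[start:start + chunk_size]
        crc = zlib.crc32(payload)               # checksum computed by the sender
        attempt = 0
        while True:
            arrived = channel(seq, payload, attempt)
            if zlib.crc32(arrived) == crc:      # intact: accept and move on
                received.append(arrived)
                break
            attempt += 1                        # corrupted: request a re-send
            resends += 1
    return b"".join(received), resends

data = b"web pages, emails, spreadsheets"
out, resends = transfer(data)
print(out, resends)
```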

IT IS ABOUT TIME

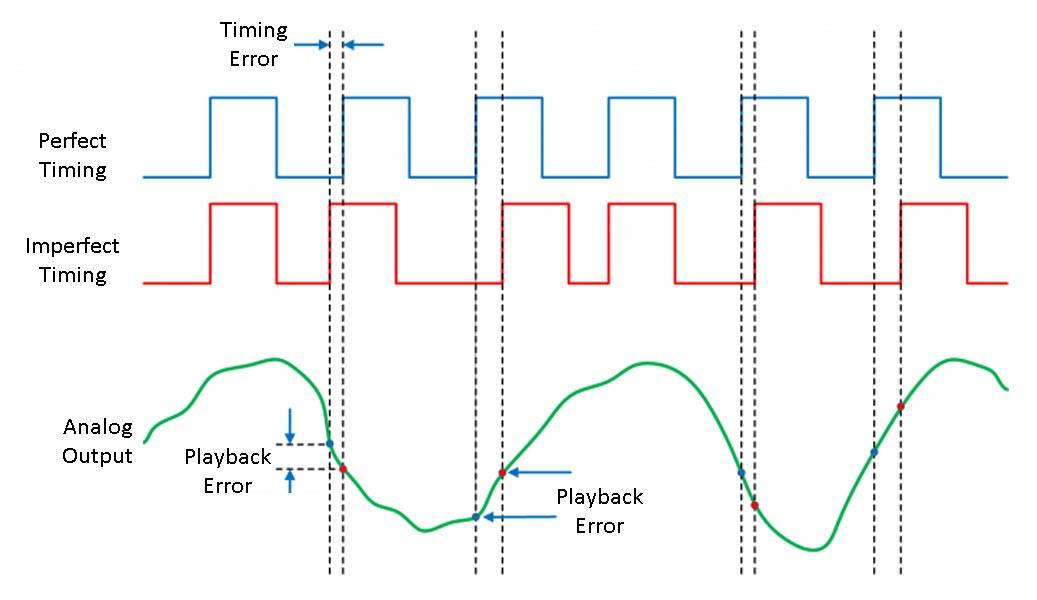

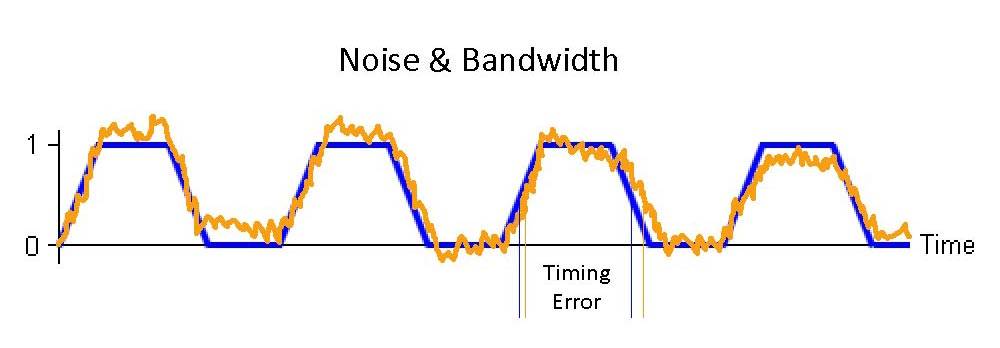

The digital audio signal that enters the DAC chip needs to have accurate and unambiguous timing, or the resulting analog audio signal will be distorted. Ideally, it would be a perfect square wave, as in the blue line below.

This means that playing a music file is a different problem from simply transmitting a file from one place to another. Accurately playing a digital music file means reproducing the amplitude dimension accurately and with accurate timing. This is the Achilles heel of digital audio: sound quality is hyper-sensitive to the timing errors that are inevitable in the transmission of digital data.

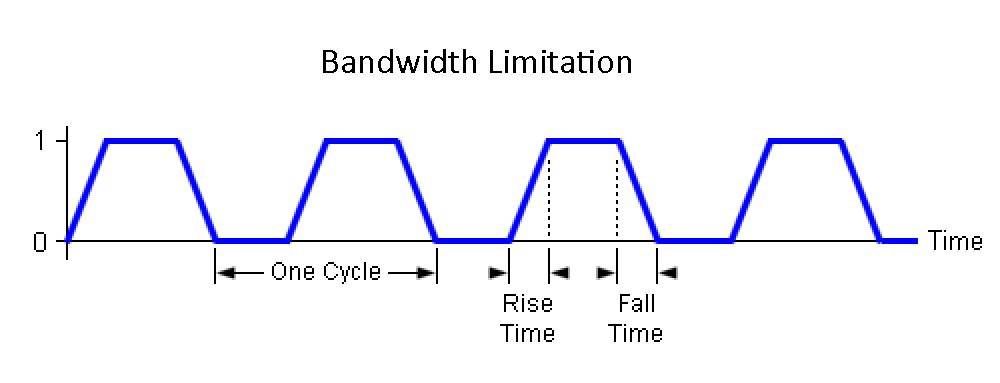
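
A common way to quantify this sensitivity goes as follows. Suppose a sample of a sine of amplitude A and frequency f is reproduced Δt too late. The resulting error can be as large as the signal's steepest slope times the delay, 2πfA·Δt. The sketch below compares that bound with a brute-force check. The 20 kHz tone and 1 ns timing error are illustrative assumptions.

```python
import math

def jitter_error_bound(freq_hz, jitter_s, amplitude=1.0):
    """Worst-case error when a sine sample is reproduced jitter_s too late: slope x delay."""
    return 2 * math.pi * freq_hz * amplitude * jitter_s

def measured_jitter_error(freq_hz, jitter_s, sample_rate_hz=44100, n_samples=44100):
    """Largest difference between each sample at its correct time and at a late time."""
    worst = 0.0
    for k in range(n_samples):
        t = k / sample_rate_hz
        on_time = math.sin(2 * math.pi * freq_hz * t)
        late = math.sin(2 * math.pi * freq_hz * (t + jitter_s))
        worst = max(worst, abs(late - on_time))
    return worst

bound = jitter_error_bound(20000, 1e-9)        # 20 kHz tone, 1 ns timing error
measured = measured_jitter_error(20000, 1e-9)
print(bound, measured, 20 * math.log10(bound))  # roughly -78 dB relative to full scale
```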

The challenge for a Music Server/Player is to send the data of the music’s digital file as a perfectly timed square wave. But it is impossible to produce a perfect square wave because of real-world bandwidth limitations and noise interference. A perfect square wave would require infinite bandwidth, so that the signal could switch between its lowest and highest values instantaneously when transitioning between a 0 and a 1.

In reality, bandwidth is limited and so there will be a rise time and a fall time.

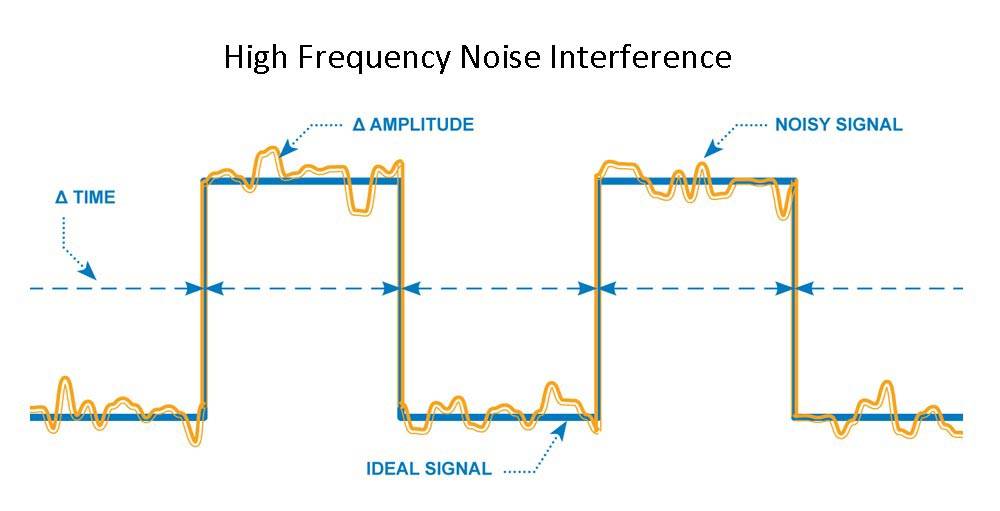
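
The link between bandwidth and rise time can be sketched with the simplest possible model, a single-pole (RC) low-pass filter. That model is an assumption here, and real interfaces are more complex. For this model, the rise time from 10% to 90% is ln(9)·τ, which gives the familiar rule of thumb t_r ≈ 0.35 / BW.

```python
import math

def step_response(t, tau):
    """Output of a single-pole (RC) low-pass filter after a 0 -> 1 input step."""
    return 1.0 - math.exp(-t / tau)

def crossing_time(level, tau, dt):
    """First time on a grid of spacing dt at which the response reaches `level`."""
    k = 0
    while step_response(k * dt, tau) < level:
        k += 1
    return k * dt

def rise_time_10_90(bandwidth_hz, steps_per_tau=10000):
    tau = 1.0 / (2 * math.pi * bandwidth_hz)    # time constant for this -3 dB bandwidth
    dt = tau / steps_per_tau
    return crossing_time(0.9, tau, dt) - crossing_time(0.1, tau, dt)

bw = 100e6                      # assumed 100 MHz bandwidth
tr = rise_time_10_90(bw)
print(tr, tr * bw)              # tr * bw is close to the 0.35 rule of thumb
```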

There will also be some noise interference with the signal from a variety of sources, as always occurs in any electronic environment. Noise at frequencies above the bit rate adds the wiggles shown in the graph below.

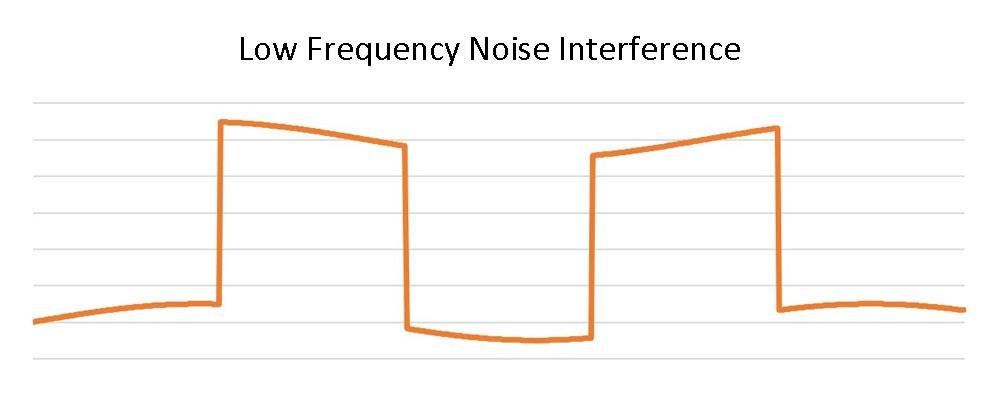

Noise at frequencies below the bit rate makes the whole wave drift up and down.

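The combined effect is illustrated below. Because every edge has a finite slope, any noise that shifts the voltage near the decision threshold also shifts the moment at which the signal crosses it, which is why timing errors are inevitable.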
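
The mechanism can be sketched numerically. An edge with a finite rise time is compared against a fixed threshold in three cases: on its own, with a slow offset standing in for noise below the bit rate, and with a fast wiggle standing in for noise above it. The voltages, rise time, threshold and noise frequency are all illustrative assumptions.

```python
import math

V_HIGH = 3.3            # assumed logic-high voltage
RISE_TIME = 2e-9        # assumed duration of a linear 0 V -> V_HIGH edge (s)
THRESHOLD = V_HIGH / 2  # assumed decision threshold

def edge(t):
    """A 0 -> 1 transition with finite rise time, modeled as a linear ramp."""
    return min(max(t / RISE_TIME, 0.0), 1.0) * V_HIGH

def crossing(signal, dt=1e-13, t_end=4e-9):
    """First time (on a dt grid) at which the signal reaches the threshold."""
    for k in range(int(t_end / dt)):
        if signal(k * dt) >= THRESHOLD:
            return k * dt
    return None

clean = crossing(edge)
# Low-frequency noise: the whole waveform is lifted by 0.1 V near the edge.
drifted = crossing(lambda t: edge(t) + 0.1)
# High-frequency noise: a 0.1 V, 3 GHz wiggle riding on the edge.
wiggly = crossing(lambda t: edge(t) + 0.1 * math.cos(2 * math.pi * 3e9 * t))

slope = V_HIGH / RISE_TIME                  # volts per second on the edge
print(clean, drifted, wiggly, 0.1 / slope)
```

In this model the slow 0.1 V offset moves the crossing about 61 ps earlier. That shift equals the noise voltage divided by the slope of the edge. The fast wiggle also moves the crossing, by an amount that depends on its phase at the moment of the edge.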

The challenge is to maximise bandwidth and minimise noise in order to minimise the distortion of the audio signal.
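
A common first-order engineering approximation shows why both bandwidth and noise matter. It is a rough estimate and is not taken from the original text. Near the decision threshold, a noise voltage v_n shifts the crossing time by roughly v_n divided by the slope of the edge. The slope can be approximated as the voltage swing divided by the rise time t_r, and t_r can be approximated with the single-pole rule of thumb t_r ≈ 0.35 / BW:

$$
\Delta t \;\approx\; \frac{v_n}{\left.\dfrac{dV}{dt}\right|_{V_{\mathrm{th}}}} \;\approx\; \frac{v_n\, t_r}{V_{\mathrm{swing}}} \;\approx\; \frac{0.35\, v_n}{\mathrm{BW}\cdot V_{\mathrm{swing}}}
$$

For example, assume 0.1 V of noise on a 3.3 V swing with a 2 ns rise time. Then Δt ≈ 0.1 × 2 ns / 3.3 ≈ 61 ps. Under this approximation, halving the noise or doubling the bandwidth each roughly halves the timing error.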

In an analog environment, electronic noise adds to the signal but does not necessarily change the sounds of reproduced voices and instruments because the noise is often heard as a separate sound. In digital, the combination of noise and bandwidth limitations will always produce some timing error, and this is not heard as noise, but is heard as changes to the reproduced sound of voices and instruments.

When someone claims that you can use any computer to do the music server task because it is just about 1s and 0s, they are completely right about the amplitude dimension, and completely wrong about the time dimension. While the music file tells the Music Server/Player the rate at which to play the file, the timing accuracy achieved varies with the quality of the design and implementation of the Music Server/Player and DAC. While digital audio makes the amplitude information very resilient, it also makes the timing information hyper-sensitive and hyper-critical to achieving a high-end audio result.

If only moderate fidelity is required, then the playback system can be rudimentary. The sound will be fairly clear and articulate, but the sounds of voices and instruments will be unnatural, and listener fatigue will set in quickly.

Getting the timing right is not only very hard to do very well, but is impossible to do perfectly. Like everything else in audio, getting ever closer to perfection is a never-ending challenge. What is good enough for you is a personal choice, and not something that the science can determine for you.

People sometimes observe that, despite its weaknesses, analog audio degrades more ‘gracefully’ than digital. In the decades since the first terrible-sounding CDs and CD players, digital audio recording engineers and digital audio equipment manufacturers have made big improvements. This is not because digital audio theory has changed. It is because we are learning how to reduce the problems in digital audio, and how to make their impact on music more ‘graceful’.

There are several parallels in the audio industry. For example, in the early days of solid state amplification, the differences from the best tube amplifiers were stark, but over many years the sound of great amplifiers, tube and solid state, has converged. The same thing is happening in the source world. Digital sources and analog sources still sound quite different from each other, but the sound of the best examples continues to converge through advances in design.